Setup and Configure Splunk in 2025 - Part Four in Cyber Lab Series

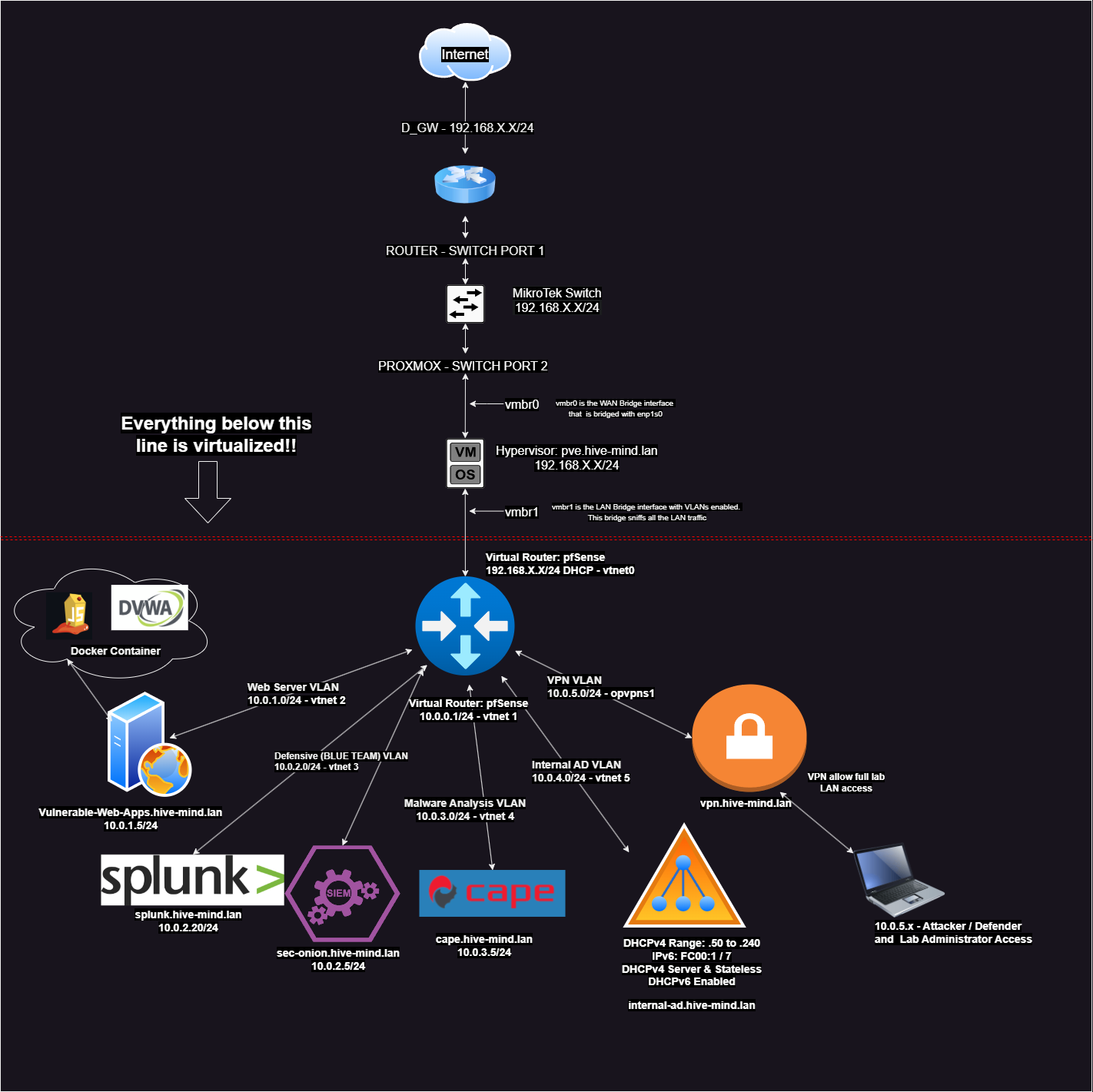

In our previous article we set up our SOC/SIEM combo with Security Onion. In this article we will set up the Splunk SIEM, which sits alongside Security Onion in our blue team subnet.

Create Ubuntu VM (for Splunk Enterprise)

Download the Ubuntu Desktop ISO: https://ubuntu.com/download/desktop/thank-you?version=24.04&architecture=amd64&lts=true

Install Ubuntu Desktop with these minimum hardware requirements

Make sure your default Linux shell is bash, as required by Splunk. Check it with echo $0; if it returns something other than bash, change it:

sudo dpkg-reconfigure [shell-name]

Curl is needed for Splunk to install properly:

sudo apt install curl

Improve Ubuntu VM Performance

For improved Ubuntu VM performance, add virtio to /etc/initramfs-tools/modules

When you open (or create) the file with your favorite text editor, add these three lines:

virtio_pci

virtio_blk

virtio_net

What is VirtIO?

VirtIO is a virtualization standard that provides paravirtualized device drivers to improve VM performance. It allows the guest OS (Ubuntu in this case) to efficiently communicate with the host’s hardware, reducing overhead and improving I/O performance.

Why Add These Lines to /etc/initramfs-tools/modules?

The file /etc/initramfs-tools/modules contains a list of kernel modules that should be preloaded into the initramfs (initial RAM filesystem). Adding these VirtIO modules ensures that they are loaded early in the boot process, allowing the VM to use optimized virtualized drivers instead of slower emulated hardware.

Explanation of Each Module:

- virtio_pci: Enables VirtIO devices over PCI. This allows the guest VM to use VirtIO-based virtual hardware.

- virtio_blk: Enables VirtIO block devices, improving disk I/O performance.

- virtio_net: Enables VirtIO network devices, enhancing network performance.

Then run sudo update-initramfs -u and reboot Ubuntu.
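The VirtIO steps above can be sketched as a small shell function. This is only an illustration: the function name add_virtio_modules is made up for this example, and it takes the file path as an argument so you can try it on a scratch file before touching the real one.

```shell
# Append each VirtIO module name to a modules file, skipping names already listed.
# The file path is a parameter so the function can be tested on a scratch file.
add_virtio_modules() {
    local modules_file="$1"
    local module
    touch "$modules_file"
    for module in virtio_pci virtio_blk virtio_net; do
        # -q: no output, -x: match the whole line, -F: treat the name literally
        if ! grep -qxF "$module" "$modules_file"; then
            echo "$module" >> "$modules_file"
        fi
    done
}

# Real use, from a root shell, followed by rebuilding the initramfs and rebooting:
#   add_virtio_modules /etc/initramfs-tools/modules
#   update-initramfs -u
# After the reboot, lsmod | grep virtio lists the VirtIO modules that are loaded.
```

Because the function checks for an existing line first, running it twice does not duplicate entries.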

Enable SSH if you want to access your Ubuntu VM remotely:

sudo apt install openssh-server

sudo systemctl enable --now ssh

sudo systemctl status ssh

sudo ufw allow ssh

sudo systemctl start ssh

Set Up Splunk Enterprise

Scroll down to “Debian DEB installation” in the Splunk docs to see the Splunk installation instructions for yourself: https://docs.splunk.com/Documentation/Splunk/9.2.2/Installation/InstallonLinux

I used a wget command from the Splunk Enterprise downloads website in my Ubuntu VM shell to get the Splunk Enterprise .deb file. The command may be slightly different for you depending on the current Splunk Enterprise version, so copy my command with that in mind.

Download the Splunk .deb file:

wget -O splunk-9.2.2-d76edf6f0a15-linux-2.6-amd64.deb "https://download.splunk.com/products/splunk/releases/9.2.2/linux/splunk-9.2.2-d76edf6f0a15-linux-2.6-amd64.deb"

Install Splunk .deb file:

sudo dpkg -i splunk[version].deb

Start up Splunk at boot up of the Ubuntu VM (the splunk binary lives in /opt/splunk/bin):

sudo /opt/splunk/bin/splunk enable boot-start -systemd-managed 1 --accept-license

Check the Splunkd service status:

sudo systemctl status Splunkd

- Start “Splunkd” at boot (if not enabled from previous command)

sudo systemctl enable Splunkd

There may be permission issues within the /opt/splunk directory that prevent the Splunkd service from running. In that case, change ownership of that directory recursively to the splunk service user:

[OPTIONAL]

sudo chown -R splunk:splunk /opt/splunk

Go into the Splunk binary directory and start the Splunk service as sudo:

cd /opt/splunk/bin

sudo ./splunk start

You should see this:

Open up your browser on the host machine (after you are connected with your pfSense VPN). Go to the Splunk machine IP at port 8000. For example, in Firefox I would type in:

http://10.0.2.20:8000

Log in and configure receiving

- Make sure port 9997 is enabled
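Receiving can also be turned on from the Splunk CLI rather than the web interface. The sketch below is a hedged example, not the method used in this article: the SPLUNK_BIN variable and the enable_receiving function are names invented here, and the path assumes the default /opt/splunk install. The underlying command, splunk enable listen, is part of the Splunk CLI.

```shell
# Path to the Splunk CLI; /opt/splunk is the install location used in this article.
SPLUNK_BIN="${SPLUNK_BIN:-/opt/splunk/bin/splunk}"

# Enable a receiving port so forwarders can send data to this Splunk instance.
# Defaults to 9997 when no port is given.
enable_receiving() {
    local port="${1:-9997}"
    "$SPLUNK_BIN" enable listen "$port"
}

# Real use on the Splunk VM, from a root shell (Splunk asks for admin credentials):
#   enable_receiving 9997
# Then confirm that something is listening on the port:
#   ss -tln | grep 9997
```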

Splunk Universal Forwarder - FreeBSD Deployment (pfSense)

We will set up Splunk on our pfSense router to be thorough in getting all the logs that we need.

- Login to the pfSense Web Interface and go to Firewall Rules

- Click on Add rule

- Rule settings below:

Action : Pass

Interface: LAN (Choose the interface that you want to monitor, I am monitoring my entire LAN)

Protocol: UDP

In the Destination Port Range field, enter 7001.

In the Description field, enter a meaningful name for the rule (e.g. Allow UDP traffic on port 7001 for Splunk).

Save the rule

Download here (requires login): https://www.splunk.com/en_us/download/previous-releases-universal-forwarder.html

- Create the /opt directory and then create the splunk directory within it.

- Copy the wget command, modify it to curl (as seen below) and paste it into the pfSense terminal (if you have that capability) with root privileges.

curl -O "https://download.splunk.com/products/universalforwarder/releases/9.4.1/freebsd/splunkforwarder-9.4.1-2f7817798b5d-freebsd14-amd64.tgz"

I followed the instructions here, but I have replicated them in this article too for continuity’s sake.

- Expand the .tgz file using tar and install it within /opt/splunk

tar xvzf splunkforwarder<SNIP>.tgz

We should have something like this:

- Create the index configuration file in /opt/splunk/etc/system/local (Splunk reads index definitions from a file named indexes.conf)

nano indexes.conf

[fw]

homePath = $SPLUNK_DB/fwdb/db

coldPath = $SPLUNK_DB/fwdb/colddb

thawedPath = $SPLUNK_DB/fwdb/thaweddb

What do coldPath and thawedPath mean?

In Splunk, data starts in the hot stage (actively written to and searchable), then moves to the warm stage (still searchable but read-only), and eventually to the cold stage as it gets older.

The cold stage is typically used for data that is accessed less frequently. It might be moved to slower, cheaper storage to save costs while still keeping the data searchable.

When data in Splunk reaches the end of its lifecycle (based on retention policies), it is frozen, meaning it is either deleted or archived (e.g., moved to an external storage system).

If you need to search that frozen data again, you can “thaw” it by bringing it back into Splunk. The thawedPath is where this restored data is stored.

Thawed data is typically read-only and can be searched alongside hot, warm, and cold data.

- Create an inputs configuration file

The inputs.conf file in Splunk is used to configure data inputs, telling Splunk where to find data, how to monitor it, and how to process it. In this case the pfsense_inputs_fw app will ingest logs generated by a pfSense firewall. The inputs.conf file specifies the data source, the index where the data will be stored, and the type of data being ingested.

mkdir /opt/splunk/etc/apps/pfsense_inputs_fw

mkdir /opt/splunk/etc/apps/pfsense_inputs_fw/local

cd /opt/splunk/etc/apps/pfsense_inputs_fw/local

nano inputs.conf

[udp://:7001]

index=fw

sourcetype=pfsense

The goal here is to set up Splunk to ingest pfSense firewall logs via UDP on port 7001, store them in the fw index, and tag them with the pfsense sourcetype for proper parsing and searching.
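If you would rather not use an interactive editor, the two configuration files can be written with here-documents. This is a minimal sketch under a few assumptions: the function names write_fw_index and write_pfsense_input are invented for this example, the target directories are passed in as arguments, and any existing file of the same name is overwritten. Splunk reads these settings from files named indexes.conf and inputs.conf.

```shell
# Write the fw index definition into indexes.conf in the given directory.
# Overwrites an existing indexes.conf in that directory.
write_fw_index() {
    local conf_dir="$1"
    mkdir -p "$conf_dir"
    # The quoted 'EOF' keeps $SPLUNK_DB literal so Splunk, not the shell, expands it.
    cat > "$conf_dir/indexes.conf" <<'EOF'
[fw]
homePath = $SPLUNK_DB/fwdb/db
coldPath = $SPLUNK_DB/fwdb/colddb
thawedPath = $SPLUNK_DB/fwdb/thaweddb
EOF
}

# Write the UDP 7001 input stanza into inputs.conf in the given directory.
# Overwrites an existing inputs.conf in that directory.
write_pfsense_input() {
    local conf_dir="$1"
    mkdir -p "$conf_dir"
    cat > "$conf_dir/inputs.conf" <<'EOF'
[udp://:7001]
index = fw
sourcetype = pfsense
EOF
}

# Real use, from a root shell, with the paths from this article:
#   write_fw_index /opt/splunk/etc/system/local
#   write_pfsense_input /opt/splunk/etc/apps/pfsense_inputs_fw/local
#   /opt/splunk/bin/splunk restart
```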

- Restart Splunk Server

/opt/splunk/bin/splunk restart

- Enter Remote Logging information on your pfSense Dashboard.

Navigate to Status > System Logs > Settings

Scroll down to Remote Logging Options, then tick to enable Remote Logging.

Enter the IP address of the Splunk server followed by the port number under Remote log servers (e.g. <Splunk IP>:7001).

Click on Save to enable log forwarding to Splunk server.

Note the 10.0.2.5 IP is our Security Onion log server. 10.0.2.20 is our Splunk log server IP.

- VM Configuration on pfSense.

Go to your pfSense console shell and make sure you create these files.

To ensure that the forwarder functions properly on FreeBSD, you must perform some additional activities after installation. This includes setting process and virtual memory limits.

The figures below represent a host with 2GB of physical memory. If your host has less than 2 GB of memory, reduce the values accordingly.

Add the following to /boot/loader.conf

kern.maxdsiz="2147483648" # 2GB

kern.dfldsiz="2147483648" # 2GB

machdep.hlt_cpus=0

Add the following to /etc/sysctl.conf:

vm.max_proc_mmap=2147483647

Restart FreeBSD for the changes to take effect.

My pfSense installation had ~10GB of memory. These limits are given in bytes (not bits), and the value I used is 1073741824, which is 1 GiB; a full 10 GiB would be 10737418240.

My /boot/loader.conf

kern.maxdsiz="1073741824" # 1 GiB

kern.dfldsiz="1073741824" # 1 GiB

machdep.hlt_cpus=0

My /etc/sysctl.conf

vm.max_proc_mmap=1073741823

I just echo’d the lines of text one at a time in my limited pfSense root shell into the files. Since vi sucks.
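The loader.conf limits are byte counts, so it helps to compute them rather than type them from memory. Below is a small sketch using shell arithmetic; the function name gib_to_bytes is just an illustrative helper, and it can be run on any machine with a POSIX-style shell. The sysctl values in this article are one less than the corresponding byte count.

```shell
# Convert a whole number of GiB to bytes (1 GiB = 1024 * 1024 * 1024 bytes).
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 1     # prints 1073741824
gib_to_bytes 2     # prints 2147483648 (the value in the 2 GB example)
gib_to_bytes 10    # prints 10737418240
```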

- Splunk Data Inputs

Go to Settings > Data Inputs on your Splunk Dashboard. Click + Add New next to UDP and then enter 7001.

- Restart our pfSense VM and Splunk VM (if needed).

We can see our firewall is successfully sending logs to Splunk!

Our LAN traffic is allowed and WAN traffic is dropped by pfSense. This is clearly shown in the logs.

Resources used for this section

Hurricane Labs, setting up pfSense and Splunk

Jay Croos Article on Splunk and monitoring pfSense Logs

Splunk Universal Forwarder - Windows Deployment

Windows Host Endpoint

Go to: https://www.splunk.com/en_us/download/universal-forwarder.html

Create a free account

Download the forwarder

- Transfer the forwarder onto your Windows lab host

- Once installation is finished, check your Splunk Dashboard. You can connect through your OpenVPN on your Kali Box and access the dashboard from there as well.

We can see the Windows host is successfully sending events to our Splunk SIEM!

Windows DC

- Open an administrative command prompt to run our .msi installer.

- Download the Windows Server 2019 .msi

Download Universal Forwarder for Remote Data Collection Splunk: https://www.splunk.com/en_us/download/universal-forwarder.html?locale=en_us

wget -O splunkforwarder-9.4.1-e3bdab203ac8-windows-x64.msi "https://download.splunk.com/products/universalforwarder/releases/9.4.1/windows/splunkforwarder-9.4.1-e3bdab203ac8-windows-x64.msi"

The download page has a Copy wget link section. Copy that and turn it into a curl command, because Windows doesn’t ship with wget:

curl -O "https://download.splunk.com/products/universalforwarder/releases/9.4.1/windows/splunkforwarder-9.4.1-e3bdab203ac8-windows-x64.msi"

- Run the curl command. Once the .msi is downloaded, double-click it to run it as Administrator.

- Verify the download by comparing the SHA512 hashes.

Our hashes match, so we’re good!

- Check to see if the DC is reporting back to the Splunk SIEM dashboard.

Success!

Generate some logs

Now time for the fun part! Let’s generate some logs…

We will perform some basic nmap scanning from our attacker machine to our Blue Team gateway address and see what events generate in Splunk.

- We will try a regular service scan with default scripts.

sudo nmap -sV -sC -Pn -T5 10.0.2.1

Our source (Attacker) IP is 10.0.5.3
